1. Definition and scope

1.1 Artificial Intelligence (AI) is technology that enables a computer to think or act in a more ‘human’ way. It does this by taking in data and using algorithms to decide its response.

1.2 This policy refers to generative AI, which the Department for Education (2023) defines as:

“Technology that can be used to create new content based on large volumes of data that models have been trained on. This can include audio, code, images, text, simulations, and videos.”

1.3 This policy draws upon advice from HM Government, the Department for Education (DfE), the Joint Council for Qualifications (JCQ), Advance HE, and academics based at UK and international higher education providers.

1.4 This policy applies to the use of AI by all employees and students at the College.

2. Principles

The following underlying principles have guided the procedures within this policy:

2.1 AI poses opportunities and challenges for the education sector. The College will make the best use of opportunities, build trust, and mitigate challenges to protect integrity, safety and security.

2.2 AI tools can make tasks quicker and easier. They can generate routine information that would take a human much longer to produce. AI works within the parameters set for it by users, so users need to be skilled in asking effective questions.

2.3 Using AI tools can improve comprehension and retention of key concepts, reduce frustration, and motivate and engage users (Chen, Chen and Lin 2020; DfE 2023).

2.4 Having access to AI is not a substitute for having knowledge, because humans cannot make the most of AI without knowledge to draw upon. We learn how to write good prompts for AI tools by writing clearly and understanding the subject; we can sense-check the results only if we have a schema against which to compare them (University of Exeter 2023). AI is not a replacement for effective teaching, learning or professional development activities.

2.5 Information generated by AI is not always accurate or appropriate, so users need skills to verify, analyse, evaluate and adapt material produced by AI tools.

2.6 AI tends to be developed by a specific demographic; therefore, it could perpetuate a one-dimensional view. AI output may not reflect cultural differences or a range of voices. Users need to be aware of this and of the potential for bias in AI output.

2.7 Personal and sensitive data entered into AI tools might be shared with unknown parties, posing a security risk and potential data breach.

3. Roles, Responsibilities and Procedures

3.1 Students

3.1.1 Students may use AI to support their studies, provided that the generated text is:

• Checked for validity, accuracy, reliability and relevance.

• Free from bias or prejudice and used with integrity.

• Critically evaluated, like any other information source.

• Referenced correctly in-text and in final references.

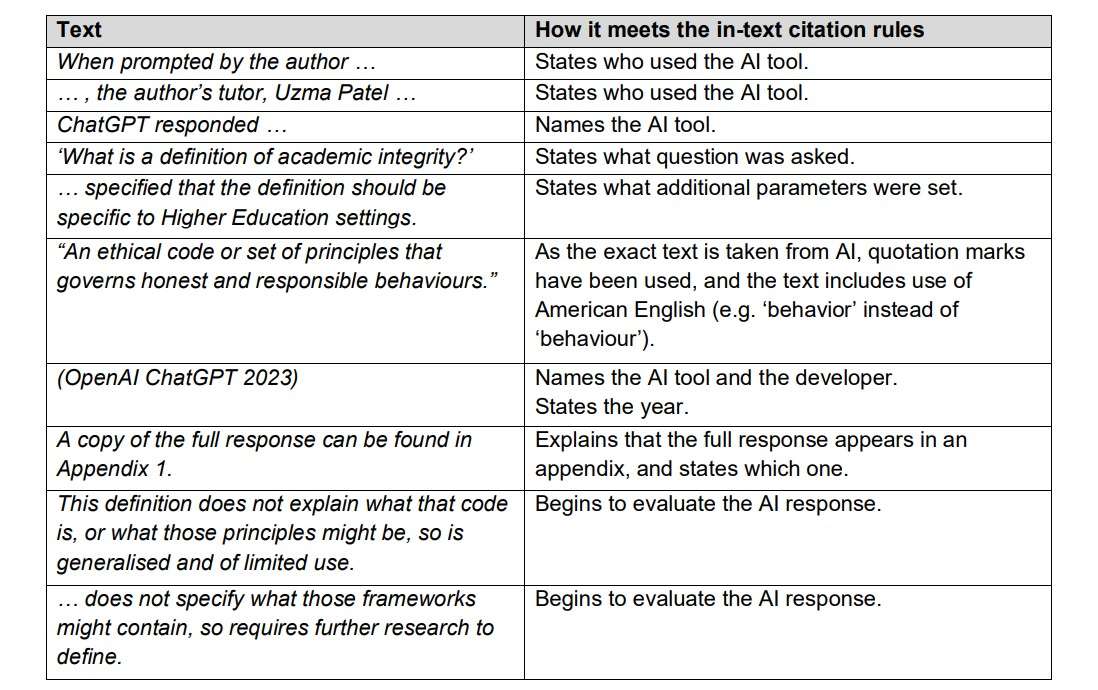

In-Text Citations

3.1.2 The in-text citation must follow these rules:

• State who used the AI tool.

• Name the AI tool and the developer.

• State what question was asked, and any additional parameters set.

• State the year the question was asked/parameters set.

• Explain that the full response appears in an appendix, and state which one – ensure the appendix contains everything generated by the AI tool on this occasion.

• Evaluate the AI response.

• If text is taken directly from AI, quotation marks must be used. The text must be exact, including errors or use of American English.

3.1.3 In-text citation example 1:

When prompted by the author of this assignment, ChatGPT responded to the question, ‘What is a definition of academic integrity?’ with the following:

“An ethical code or set of principles that governs honest and responsible behavior.” (OpenAI ChatGPT 2023)

A copy of the full response can be found in Appendix 1.

This definition does not explain what that code is, or what those principles might be, so it is generalised and of limited use.

3.1.4 In-text citation example 2:

The author’s tutor, Uzma Patel, used a different AI tool and specified that the definition should be specific to Higher Education settings. This returned the following response:

“Academic integrity in higher education refers to the ethical and moral framework that guides the behavior of students, faculty, researchers, and staff within colleges and universities.” (Google Bard 2023).

A copy of the full response can be found in Appendix 2.

This refers to frameworks, and who they apply to, but does not specify what those frameworks might contain, so requires further research to define.

3.1.5 Table 1 below analyses the examples in paragraphs 3.1.3 and 3.1.4 to show how each part of the text meets the citation rules.

Table 1: Analysis of examples